Channel capacity

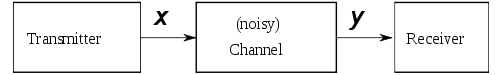

In electrical engineering, computer science and information theory, channel capacity is the tightest upper bound on the rate at which information can be reliably transmitted over a communications channel. By the noisy-channel coding theorem, the channel capacity of a given channel is the limiting information rate (in units of information per unit time) that can be achieved with arbitrarily small error probability.[1][2]

Information theory, developed by Claude E. Shannon during World War II, defines the notion of channel capacity and provides a mathematical model by which one can compute it. The key result states that the capacity of the channel, as defined above, is given by the maximum of the mutual information between the input and output of the channel, where the maximization is with respect to the input distribution.[3]


Formal definition

Let X represent the space of signals that can be transmitted, and Y the space of signals received, during a block of time over the channel. Let

$$p_{Y|X}(y \mid x)$$

be the conditional distribution function of Y given X. Treating the channel as a known statistical system, $p_{Y|X}(y \mid x)$ is an inherent fixed property of the communications channel (representing the nature of the noise in it). Then the joint distribution

$$p_{X,Y}(x, y)$$

of X and Y is completely determined by the channel and by the choice of

$$p_X(x) = \int_y p_{X,Y}(x, y)\,dy,$$

the marginal distribution of signals we choose to send over the channel. The joint distribution can be recovered by using the identity

$$p_{X,Y}(x, y) = p_{Y|X}(y \mid x)\,p_X(x).$$

Under these constraints, one seeks to maximize the amount of information, or the message, that can be communicated over the channel. The appropriate measure for this is the mutual information I(X;Y), and this maximum mutual information is called the channel capacity and is given by

$$C = \sup_{p_X} I(X;Y).$$
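As a rough illustration of this definition, the sketch below computes I(X;Y) for a discrete channel and approximates the maximization over the input distribution by a simple grid search. The binary symmetric channel, its crossover probability of 0.1, and all function names are assumptions chosen for the example; they do not come from the article. The grid search is a stand-in for a proper optimizer and only handles two-input channels.

```python
import math

def mutual_information(p_x, p_y_given_x):
    """I(X;Y) in bits for input distribution p_x and channel matrix p_y_given_x[x][y]."""
    n_y = len(p_y_given_x[0])
    # Output marginal: p_Y(y) = sum_x p_X(x) * p_{Y|X}(y|x)
    p_y = [sum(p_x[x] * p_y_given_x[x][y] for x in range(len(p_x)))
           for y in range(n_y)]
    info = 0.0
    for x, px in enumerate(p_x):
        for y in range(n_y):
            joint = px * p_y_given_x[x][y]  # p_{X,Y}(x,y) = p_{Y|X}(y|x) * p_X(x)
            if joint > 0.0:
                info += joint * math.log2(joint / (px * p_y[y]))
    return info

def binary_input_capacity(p_y_given_x, steps=10_000):
    """Approximate C = sup over p_X of I(X;Y) by a grid search over q = P(X=0)."""
    best_rate, best_q = 0.0, 0.0
    for i in range(steps + 1):
        q = i / steps
        rate = mutual_information([q, 1.0 - q], p_y_given_x)
        if rate > best_rate:
            best_rate, best_q = rate, q
    return best_rate, best_q

# Hypothetical example: binary symmetric channel with crossover probability 0.1
flip = 0.1
bsc = [[1 - flip, flip], [flip, 1 - flip]]
capacity, q_star = binary_input_capacity(bsc)
print(f"capacity ~ {capacity:.4f} bits per channel use at P(X=0) = {q_star}")
```

For this symmetric channel the search should settle on the uniform input distribution and agree with the closed form 1 − H₂(0.1), about 0.531 bits per channel use.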

Noisy-channel coding theorem

The noisy-channel coding theorem states that for any ε > 0 and for any rate R less than the channel capacity C, there is an encoding and decoding scheme that can be used to ensure that the probability of block error is less than ε for a sufficiently long code. Also, for any rate greater than the channel capacity, the probability of block error at the receiver goes to one as the block length goes to infinity.

Example application

An application of the channel capacity concept to an additive white Gaussian noise (AWGN) channel with B Hz bandwidth and signal-to-noise ratio S/N is the Shannon–Hartley theorem:

$$C = B \log_2\left(1 + \frac{S}{N}\right)$$

C is measured in bits per second if the logarithm is taken in base 2, or nats per second if the natural logarithm is used, assuming B is in hertz. The signal and noise powers S and N are measured in watts or volts², so the signal-to-noise ratio here is expressed as a power ratio, not in decibels (dB). Since figures are often cited in dB, a conversion may be needed: for example, 30 dB is a power ratio of $10^{30/10} = 10^3 = 1000$.
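The dB conversion and the Shannon–Hartley formula can be sketched in a few lines. The function names and the 3 kHz, 30 dB link are hypothetical values picked for illustration only.

```python
import math

def db_to_power_ratio(snr_db):
    """Convert an SNR in decibels to a linear power ratio."""
    return 10.0 ** (snr_db / 10.0)

def shannon_hartley_capacity(bandwidth_hz, snr_db):
    """Capacity in bits per second: C = B * log2(1 + S/N), with S/N given in dB."""
    return bandwidth_hz * math.log2(1.0 + db_to_power_ratio(snr_db))

# Hypothetical link: 3 kHz of bandwidth at 30 dB SNR
capacity_bps = shannon_hartley_capacity(3000.0, 30.0)
print(f"S/N = {db_to_power_ratio(30.0):.0f}, C ~ {capacity_bps:.0f} bit/s")
```

With these inputs the power ratio is 1000 and the capacity comes out at roughly 29.9 kbit/s.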

Channel capacity in wireless communications

This section[4] focuses on the single-antenna, point-to-point scenario. For channel capacity in systems with multiple antennas, see the article on MIMO.

AWGN channel

If the average received power is $\bar{P}$ [W] and the noise power spectral density is $N_0$ [W/Hz], the AWGN channel capacity is

$$C_{\text{awgn}} = B \log_2\left(1 + \frac{\bar{P}}{N_0 B}\right) \text{ [bits/s]},$$

where B is the channel bandwidth in hertz and $\frac{\bar{P}}{N_0 B}$ is the received signal-to-noise ratio (SNR).

When the SNR is large (SNR >> 0 dB), the capacity

$$C \approx B \log_2 \frac{\bar{P}}{N_0 B}$$

is logarithmic in power and approximately linear in bandwidth. This is called the bandwidth-limited regime.

When the SNR is small (SNR << 0 dB), the capacity

$$C \approx \frac{\bar{P}}{N_0} \log_2 e$$

is linear in power but insensitive to bandwidth. This is called the power-limited regime.

The bandwidth-limited regime and power-limited regime are illustrated in the figure.
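The two regimes can be checked numerically. The sketch below compares the AWGN capacity B·log2(1 + P/(N0·B)) with the usual high-SNR and low-SNR approximations; the power, noise density and bandwidth values are made up for the example, and the function names are illustrative.

```python
import math

def awgn_capacity(power_w, n0, bandwidth_hz):
    """Exact AWGN capacity in bits/s: B * log2(1 + P / (N0 * B))."""
    snr = power_w / (n0 * bandwidth_hz)
    return bandwidth_hz * math.log2(1.0 + snr)

def high_snr_approx(power_w, n0, bandwidth_hz):
    """Bandwidth-limited regime: C ~ B * log2(P / (N0 * B))."""
    return bandwidth_hz * math.log2(power_w / (n0 * bandwidth_hz))

def low_snr_approx(power_w, n0):
    """Power-limited regime: C ~ (P / N0) * log2(e), independent of B."""
    return (power_w / n0) * math.log2(math.e)

# Hypothetical link budget: 1 mW received power, N0 = 1e-9 W/Hz
power, n0 = 1e-3, 1e-9
narrow = awgn_capacity(power, n0, 1e3)      # SNR = 30 dB, bandwidth-limited
wide = awgn_capacity(power, n0, 1e9)        # SNR = -30 dB, power-limited
wider = awgn_capacity(power, n0, 2e9)       # doubling B again barely helps
print(narrow, high_snr_approx(power, n0, 1e3))
print(wide, low_snr_approx(power, n0), wider)
```

In the power-limited case, doubling the bandwidth changes the capacity by only a fraction of a percent, which is the insensitivity to bandwidth described above.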

Frequency-selective channel

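The capacity of the frequency-selective channel is given by so-called waterfilling power allocation,

$$C_{N_c} = \sum_{n=0}^{N_c-1} \log_2\left(1 + \frac{P_n^* |\bar{h}_n|^2}{N_0}\right),$$

where

$$P_n^* = \max\left\{\frac{1}{\lambda} - \frac{N_0}{|\bar{h}_n|^2},\, 0\right\}$$

and $|\bar{h}_n|^2$ is the gain of subchannel n, with λ chosen to meet the power constraint. Here $N_c$ denotes the number of subchannels.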
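One way to find the waterfilling allocation is to search for the water level 1/λ by bisection until the allocated powers add up to the power budget. The sketch below does this under the assumption that each subchannel is described by its power gain |h_n|² and that the constraint is a total power across subchannels; the gains, the budget, and the function names are hypothetical.

```python
import math

def waterfilling(gains, total_power, n0=1.0, iterations=100):
    """Return powers P_n = max(mu - n0/g_n, 0) whose sum equals total_power.

    gains are subchannel power gains |h_n|^2; mu plays the role of 1/lambda.
    """
    floors = [n0 / g for g in gains]  # inverse-gain "floor" of each subchannel
    # The water level lies between the lowest floor and the highest floor plus the budget.
    lo, hi = min(floors), max(floors) + total_power
    for _ in range(iterations):
        mu = 0.5 * (lo + hi)
        used = sum(max(mu - f, 0.0) for f in floors)
        if used > total_power:
            hi = mu   # too much water: lower the level
        else:
            lo = mu   # budget not exhausted: raise the level
    mu = 0.5 * (lo + hi)
    return [max(mu - f, 0.0) for f in floors]

def waterfilling_rate(gains, powers, n0=1.0):
    """Sum of log2(1 + P_n * g_n / n0) over the subchannels."""
    return sum(math.log2(1.0 + p * g / n0) for p, g in zip(powers, gains))

# Hypothetical example: three subchannels, unit noise density, unit power budget
gains = [2.0, 1.0, 0.1]
powers = waterfilling(gains, total_power=1.0)
rate = waterfilling_rate(gains, powers)
print(powers, rate)
```

In this example the weakest subchannel sits above the water level and receives no power, while the two stronger ones share the budget in favor of the better one.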

Slow-fading channel

In a slow-fading channel, where the coherence time is greater than the latency requirement, there is no definite capacity, as the maximum rate of reliable communications supported by the channel, $\log_2(1 + |h|^2 \mathrm{SNR})$, depends on the random channel gain $|h|^2$. If the transmitter encodes data at rate R [bits/s/Hz], there is a certain probability that the decoding error probability cannot be made arbitrarily small,

$$p_{\text{out}} = \mathbb{P}\left(\log_2(1 + |h|^2 \mathrm{SNR}) < R\right),$$

in which case the system is said to be in outage. With a non-zero probability that the channel is in deep fade, the capacity of the slow-fading channel in the strict sense is zero. However, it is possible to determine the largest value of R such that the outage probability $p_{\text{out}}$ is less than ε. This value is known as the ε-outage capacity.
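To make the outage idea concrete, the sketch below assumes Rayleigh fading, so that the gain |h|² is exponentially distributed with unit mean. That fading model is an assumption added for this example and is not stated in the article; under it, both the outage probability and the rate at which it reaches a target ε have closed forms. SNR is a linear power ratio, and the function names are illustrative.

```python
import math

def outage_probability(rate, snr):
    """P(log2(1 + |h|^2 * SNR) < rate), assuming |h|^2 ~ Exp(1) (Rayleigh fading)."""
    return 1.0 - math.exp(-(2.0 ** rate - 1.0) / snr)

def outage_capacity(epsilon, snr):
    """Rate at which the outage probability reaches epsilon, same assumption."""
    return math.log2(1.0 + snr * math.log(1.0 / (1.0 - epsilon)))

# Hypothetical operating point: 20 dB SNR (power ratio 100), 1 % outage target
snr = 100.0
rate = outage_capacity(0.01, snr)
print(rate, outage_probability(rate, snr), math.log2(1.0 + snr))
```

At this operating point the 1 % outage rate is about 1.0 bits/s/Hz, far below the roughly 6.66 bits/s/Hz of an unfaded AWGN channel at the same SNR, which shows how costly a tight outage target is when the channel can fade deeply.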

Fast-fading channel

In a fast-fading channel, where the latency requirement is greater than the coherence time and the codeword length spans many coherence periods, one can average over many independent channel fades by coding over a large number of coherence time intervals. Thus, it is possible to achieve a reliable rate of communication of

$$\mathbb{E}\left[\log_2(1 + |h|^2 \mathrm{SNR})\right]$$

[bits/s/Hz], and it is meaningful to speak of this value as the capacity of the fast-fading channel.
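The averaging over fades can be imitated with a small Monte Carlo estimate of the expected value of log2(1 + |h|²·SNR). As an assumption made only for this sketch, the gain |h|² is drawn from a unit-mean exponential distribution (Rayleigh fading); the sample count, the seed and the function name are likewise illustrative.

```python
import math
import random

def ergodic_capacity(snr, samples=200_000, seed=0):
    """Monte Carlo estimate of E[log2(1 + |h|^2 * SNR)] in bits/s/Hz.

    Assumes |h|^2 ~ Exp(1), i.e. Rayleigh fading with unit average gain.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    total = 0.0
    for _ in range(samples):
        gain = rng.expovariate(1.0)
        total += math.log2(1.0 + gain * snr)
    return total / samples

# Hypothetical operating point: SNR = 10 (linear power ratio)
snr = 10.0
fast_fading = ergodic_capacity(snr)
awgn = math.log2(1.0 + snr)
print(fast_fading, awgn)
```

Because the logarithm is concave, the averaged rate falls below log2(1 + SNR) for an unfaded channel with the same average gain (Jensen's inequality), so the estimate should come out smaller than the AWGN figure.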

See also

- Bandwidth (computing)

- Bandwidth (signal processing)

- Bit rate

- Code rate

- Error exponent

- Nyquist rate

- Negentropy

- Redundancy

- Sender, Encoder, Decoder, Receiver

- Shannon–Hartley theorem

- Spectral efficiency

- Throughput


References

- [1] Saleem Bhatti. "Channel capacity". Lecture notes for M.Sc. Data Communication Networks and Distributed Systems D51 -- Basic Communications and Networks. http://www.cs.ucl.ac.uk/staff/S.Bhatti/D51-notes/node31.html.

- [2] Jim Lesurf. "Signals look like noise!". Information and Measurement, 2nd ed. http://www.st-andrews.ac.uk/~www_pa/Scots_Guide/iandm/part8/page1.html.

- [3] Thomas M. Cover, Joy A. Thomas (2006). Elements of Information Theory. John Wiley & Sons, New York.

- [4] David Tse, Pramod Viswanath (2005). Fundamentals of Wireless Communication. Cambridge University Press, UK.


Wikimedia Foundation. 2010.
